Explore the crucial role of pagination in collecting complete data

By Hugo Luque & Erick Gutierrez

6 min read | October 21, 2023

When we explore the vast web, it’s common to come across websites that divide their content across multiple pages. This technique is known as ‘pagination.’ For any web scraping professional or enthusiast, it’s essential to understand and properly handle pagination to ensure the complete collection of data.

What is Pagination?

Pagination is a web design technique adopted to divide large amounts of content into individual, more manageable segments or pages. This not only enhances the user experience by avoiding information overload but also optimizes server performance by limiting the amount of data that needs to be loaded in a single request.
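On the server side, the split usually comes down to two numbers: a page number and a page size. From these, an offset into the full result set is computed. The minimal sketch below shows that arithmetic. The `paginate` function and the product list are illustrative only and are not taken from any particular site.

```python
from math import ceil


def paginate(items, page, per_page):
    """Return the slice of `items` shown on a 1-based `page`, plus the total page count."""
    total_pages = ceil(len(items) / per_page) if items else 0
    # Page N starts after the (N - 1) * per_page items shown on earlier pages
    offset = (page - 1) * per_page
    return items[offset:offset + per_page], total_pages


products = [f"product-{i}" for i in range(1, 26)]  # 25 hypothetical products
first, total = paginate(products, page=1, per_page=10)
last, _ = paginate(products, page=3, per_page=10)
print(total)     # 3
print(first[0])  # product-1
print(last)      # ['product-21', 'product-22', 'product-23', 'product-24', 'product-25']
```

The last page holds only the remaining five items. This is also why a scraper cannot assume every page has the same number of entries.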

Relevance in Web Scraping

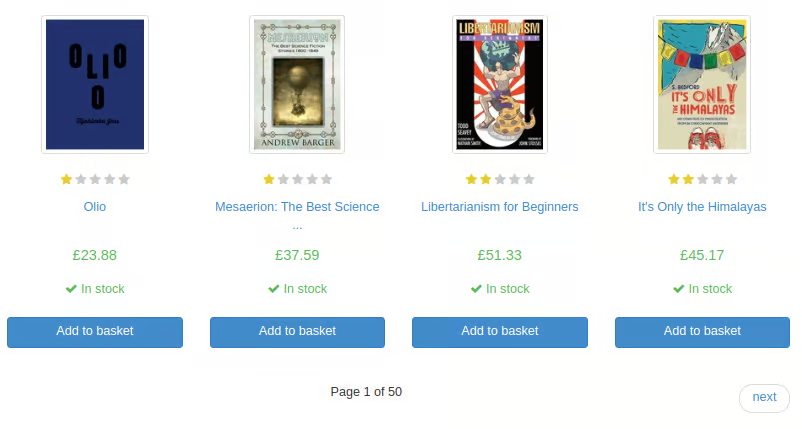

Web scraping aims to gather data from websites, and ignoring pagination can mean silently missing valuable information. Imagine an e-commerce website that lists thousands of products across hundreds of pages. If you only scrape the first page, you will miss most of the products.
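When page URLs follow a predictable pattern, one way to avoid stopping at the first page is to generate the URL of every page up front. The sketch below makes two assumptions. First, it uses a hypothetical shop (`shop.example.com`) that takes the page number in a `page` query parameter. Second, it assumes the total number of pages is already known, for instance from the last link in the pagination bar.

```python
from urllib.parse import parse_qs, urlencode, urlparse, urlunparse


def with_page(url, page, param="page"):
    """Return `url` with its `param` query parameter set to `page`, keeping other parameters."""
    parts = urlparse(url)
    query = parse_qs(parts.query, keep_blank_values=True)
    query[param] = [str(page)]  # "page" is an assumed parameter name; sites differ
    return urlunparse(parts._replace(query=urlencode(query, doseq=True)))


# Hypothetical listing URL; the page count (3) is assumed to be known
base = "https://shop.example.com/products?category=shoes&page=1"
page_urls = [with_page(base, n) for n in range(1, 4)]
for u in page_urls:
    print(u)
```

Rewriting the parameter instead of concatenating strings keeps other filters (here `category`) intact.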

Common Challenges in Pagination Handling

- Changes in URL Structure: Not all sites maintain a consistent URL structure for their pages. Some use simple patterns, such as a page number in the path or query string, while others rely on complex parameters or temporary tokens.

- Infinite Pagination: A growing trend, especially on blogs and media sites, is infinite pagination (infinite scroll), where new content loads as you scroll down. This requires a different and often more advanced scraping technique.

- Request Limits and Blocks: Scraping many pages of a website in rapid succession can trigger the site’s defense mechanisms, resulting in temporary or permanent blocks.

- Data Consistency: As you navigate through pages, the structure or the data itself may vary from one page to another, so your script needs to be adaptable.
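Infinite-scroll pages often fetch batches from a JSON endpoint in the background. Each request passes a cursor or offset that the previous response returned. When such an endpoint exists (it can often be spotted in the browser’s network tab), following the cursor may be simpler than driving a browser.

The sketch below assumes a hypothetical response shape, `{"items": [...], "next_cursor": ...}`. `fetch_batch` is a stand-in for the real HTTP call, simulated in memory so the loop runs on its own.

```python
def fetch_batch(cursor=None, batch_size=3):
    """Hypothetical stand-in for an infinite-scroll API call.

    A real scraper would request the site's endpoint here; the URL,
    parameter names and response shape depend entirely on the site.
    """
    data = [f"post-{i}" for i in range(1, 9)]  # 8 simulated posts
    start = int(cursor) if cursor else 0
    end = start + batch_size
    next_cursor = str(end) if end < len(data) else None
    return {"items": data[start:end], "next_cursor": next_cursor}


def scrape_all(fetch):
    """Keep requesting batches until the server stops returning a cursor."""
    items, cursor = [], None
    while True:
        page = fetch(cursor)
        items.extend(page["items"])
        cursor = page["next_cursor"]
        if cursor is None:
            return items


posts = scrape_all(fetch_batch)
print(len(posts))  # 8
```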

Tips to Overcome These Challenges

- In-Depth Analysis: Before you start, study the website in depth. Understand how URLs change, how data is loaded, and any other peculiarities that may affect your scraping.

- Diversify Your Tools: Instead of relying on a single tool, become familiar with several. A website that resists scraping with one tool may be more accessible with another.

- Implement Delays: Adding delays between your requests reduces the load on the site and lowers the risk of being detected as a bot and, consequently, blocked.

- Stay Updated: The world of web scraping is dynamic. What works today may not work tomorrow. Join communities, follow blogs, and stay up to date with the latest techniques and best practices.
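The “Implement Delays” tip can be sketched as follows. The code adds a randomized pause between pages, plus exponential backoff when the server answers with HTTP 429 (Too Many Requests) or 503 (Service Unavailable).

The function names, the status set, and the timings are assumptions to tune per site. `fetch` is any callable that returns an object with a `status_code` attribute, such as `requests.get`.

```python
import random
import time

RETRY_STATUSES = {429, 503}  # assumed "slow down" signals: Too Many Requests / Service Unavailable


def polite_fetch(fetch, url, max_retries=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), backing off exponentially while the server signals overload."""
    for attempt in range(max_retries + 1):
        response = fetch(url)
        if response.status_code not in RETRY_STATUSES or attempt == max_retries:
            return response
        # Wait 1x, 2x, 4x ... the base delay, plus random jitter
        sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))


def crawl(fetch, urls, min_pause=1.0, max_pause=3.0, sleep=time.sleep):
    """Fetch each URL in order with a randomized pause between requests."""
    responses = []
    for i, url in enumerate(urls):
        if i > 0:
            sleep(random.uniform(min_pause, max_pause))
        responses.append(polite_fetch(fetch, url, sleep=sleep))
    return responses
```

With Requests installed, a call could look like `crawl(lambda u: requests.get(u, timeout=10), urls)`, where `urls` is your list of page URLs. Passing `fetch` and `sleep` in as parameters keeps the logic testable without touching the network.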

Usage Examples

- Scrapy: A robust web scraping framework. Handling pagination is relatively straightforward thanks to `response.follow`, which resolves relative links and schedules the request for the next page.

Example: Let’s say you’re scraping a blog that lists its articles across multiple pages.

```python
import scrapy


class BlogSpider(scrapy.Spider):
    name = 'blogspider'
    start_urls = ['http://example.com/blog/page/1']

    def parse(self, response):
        # Extract content from the current page
        for article in response.css('div.article'):
            yield {'title': article.css('h2.title ::text').get()}

        # Follow the link to the next page
        next_page = response.css('a.next-page::attr(href)').get()
        if next_page is not None:
            yield response.follow(next_page, self.parse)
```

In this example, after extracting the content from a page, Scrapy looks for a link to the next page and follows that link to continue scraping.

- BeautifulSoup4: A library that makes it easy to parse HTML and XML documents. It does not fetch pages itself, so for navigating between pages it is usually combined with the Requests library.

Example: Assuming the same blog as before.

```python
import time
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

url = 'http://example.com/blog/page/1'

while url:
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    soup = BeautifulSoup(response.content, 'html.parser')

    # Extract content from the current page
    for article in soup.find_all('div', class_='article'):
        title = article.find('h2', class_='title')
        if title is not None:
            print(title.get_text(strip=True))

    # Check if there is a next page; resolve relative links against the current URL
    next_page = soup.find('a', class_='next-page')
    url = urljoin(url, next_page['href']) if next_page else None

    # Pause briefly before requesting the next page
    if url:
        time.sleep(1)
```

- Selenium: A tool for automating web browsers, which makes it well suited to websites with JavaScript-based pagination.

Example: Assuming the same blog as before.

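```python
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

driver = webdriver.Chrome()
try:
    driver.get('http://example.com/blog/page/1')
    while True:
        # Extract content from the current page
        articles = driver.find_elements(By.CSS_SELECTOR, 'div.article')
        for article in articles:
            print(article.find_element(By.CSS_SELECTOR, 'h2.title').text)

        # Try following the next page link; stop when there is none
        try:
            next_button = driver.find_element(By.CSS_SELECTOR, 'a.next-page')
        except NoSuchElementException:
            break
        next_button.click()
        # Wait until the old page is replaced (assumes the click loads a new page)
        WebDriverWait(driver, 10).until(EC.staleness_of(next_button))
finally:
    driver.quit()
```

In this example, Selenium not only extracts the content from the page but also clicks the “Next” button to load the next page. It then waits for the old page to be replaced before reading the new one.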
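All three examples stop only when the “next” link disappears. On some sites the last page links back to the first, or the link never disappears, which would make such a loop run forever. A cheap safeguard is to remember visited URLs and cap the number of pages.

The sketch below assumes a hypothetical `process_page(url)` callable. It is meant to fetch the page, extract its data (for example with BeautifulSoup), and return the raw `href` of the next-page link, or `None` when there is none.

```python
from urllib.parse import urljoin


def follow_pagination(start_url, process_page, max_pages=500):
    """Yield page URLs by following "next" links, stopping on repeats or a page cap.

    `process_page(url)` is a hypothetical callable that scrapes the page and
    returns the (possibly relative) href of the next-page link, or None.
    """
    seen = set()
    url = start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        yield url
        href = process_page(url)
        url = urljoin(url, href) if href else None


# Simulated site whose last page links back to the first
links = {
    "http://example.com/blog/page/1": "/blog/page/2",
    "http://example.com/blog/page/2": "/blog/page/3",
    "http://example.com/blog/page/3": "/blog/page/1",
}
pages = list(follow_pagination("http://example.com/blog/page/1", links.get))
print(len(pages))  # 3
```

Because each page is processed exactly once, the same loop works whether `process_page` uses Requests and BeautifulSoup or a different stack.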

Conclusion

Pagination is one of the many facets of web design that can pose unique challenges for web scrapers. However, by understanding its nature and learning to navigate pages properly, we can ensure the collection of complete and accurate data.

Like any other aspect of web scraping, handling pagination requires patience, adaptability, and a deep knowledge of the available tools. We should not underestimate the importance of being prepared and adapting to variations between websites.

If you want to delve deeper into advanced web scraping techniques and how to apply them in real-world scenarios, contact Bitmaker today. Their customized web scraping solutions and continuous monitoring will empower your business with the data-driven insights it needs to make informed decisions.

At Bitmaker, we want to share our ideas and show how we are contributing to the world of web scraping. We invite you to read our first technical article, Estela’s Year-One Transformation.